Weeks 1/2

Proposal

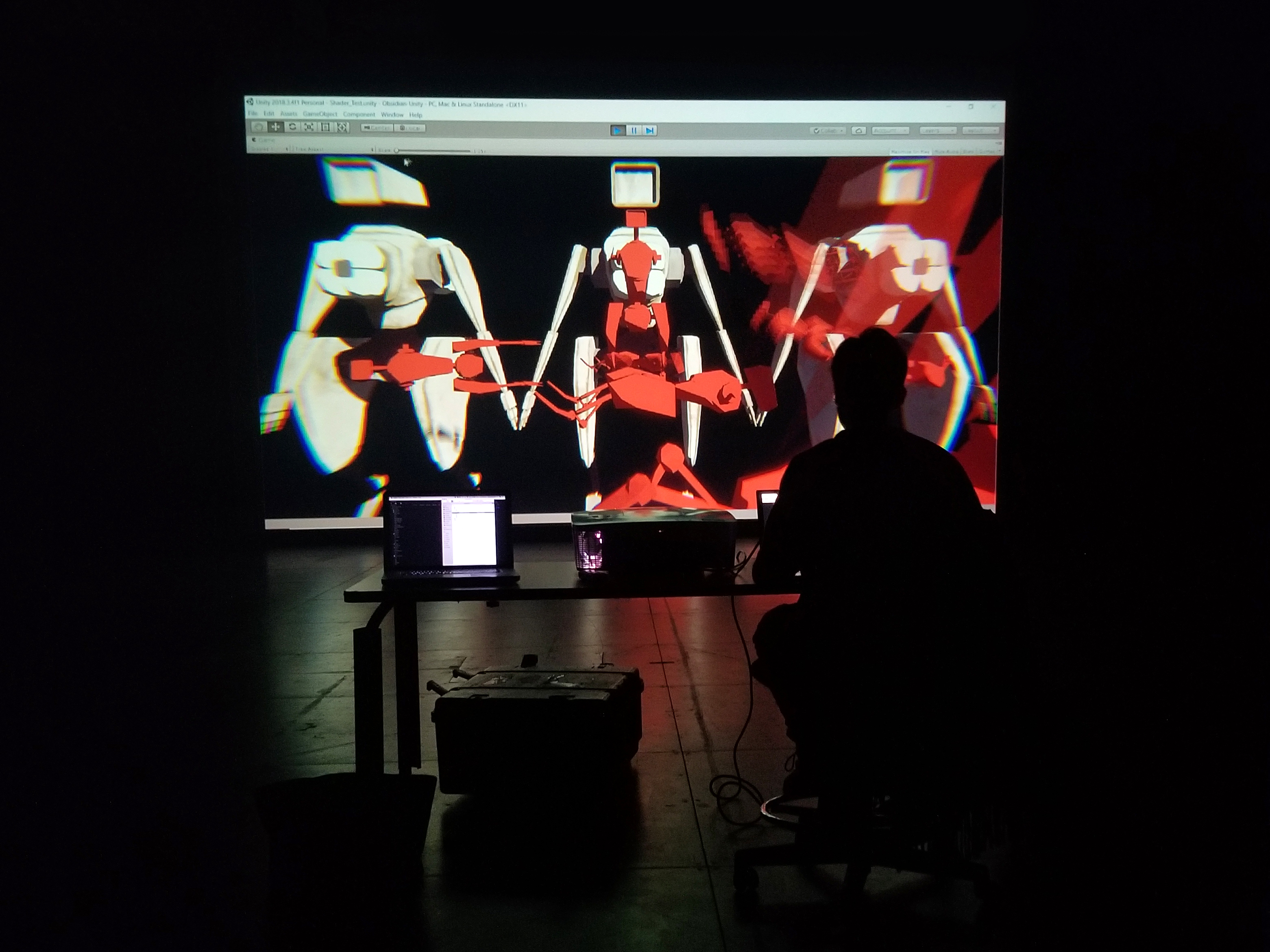

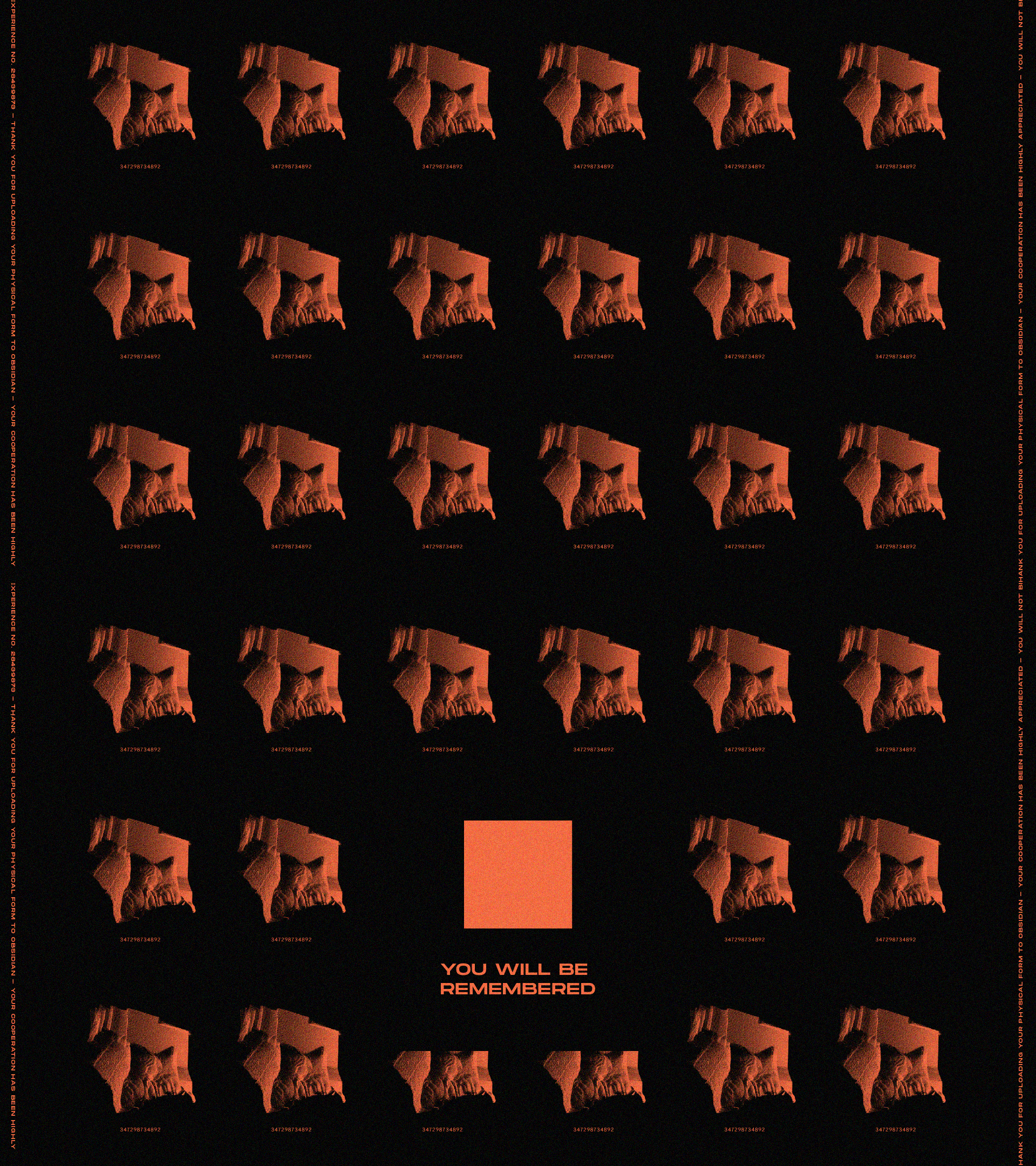

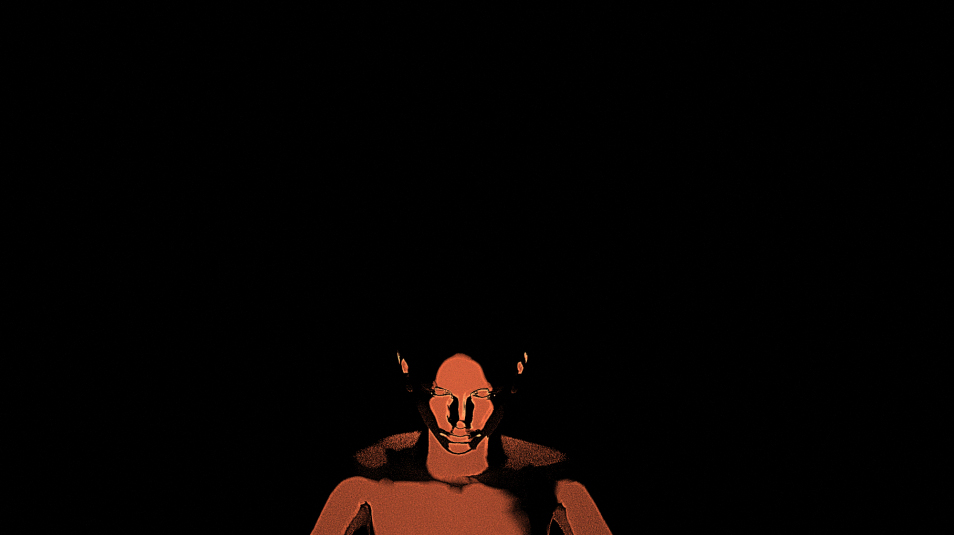

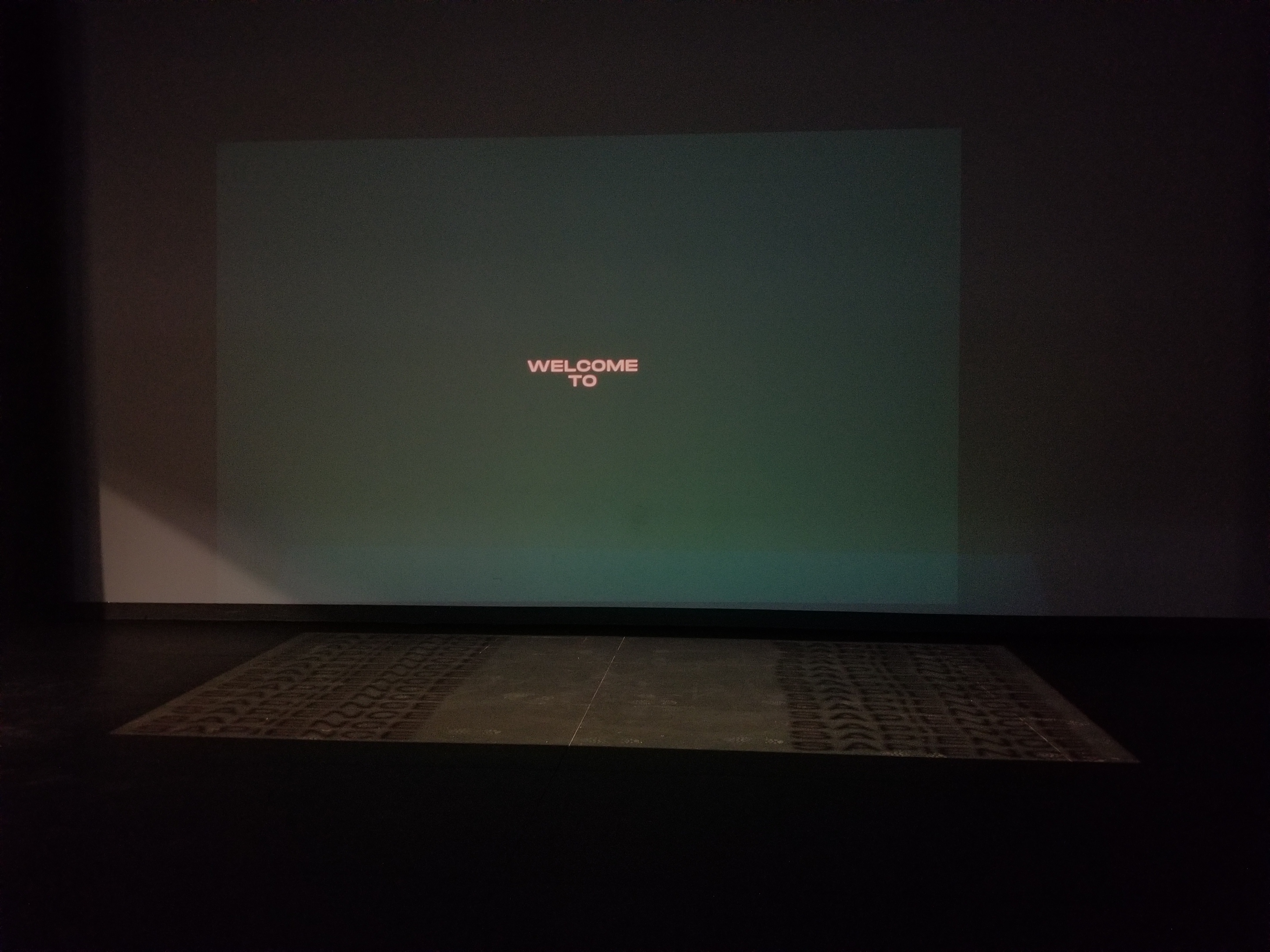

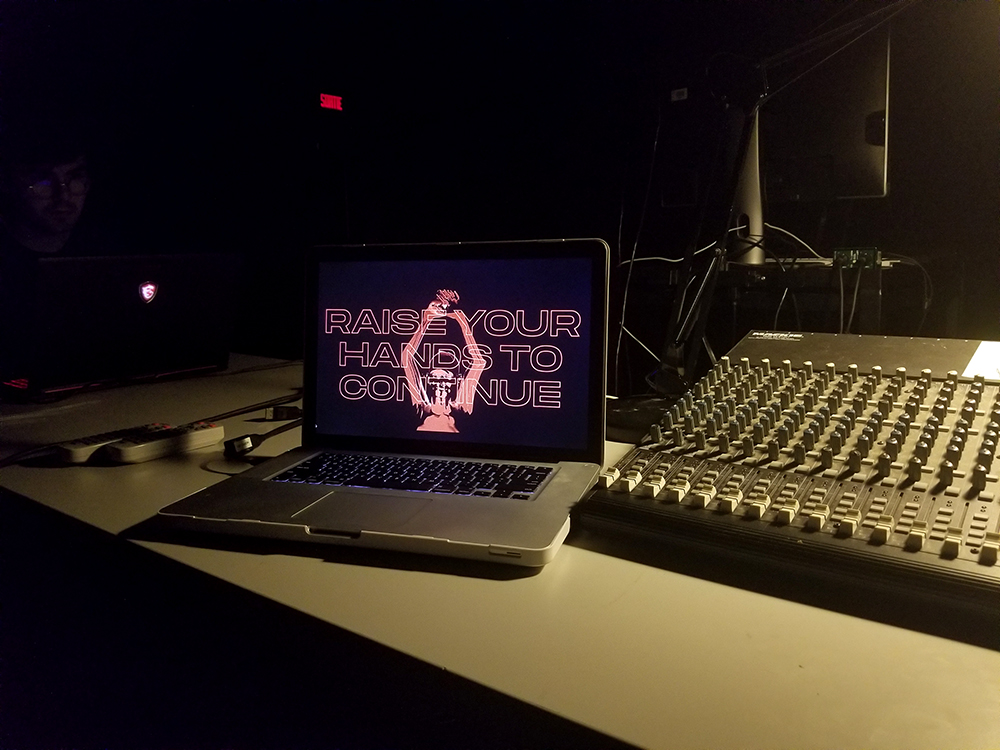

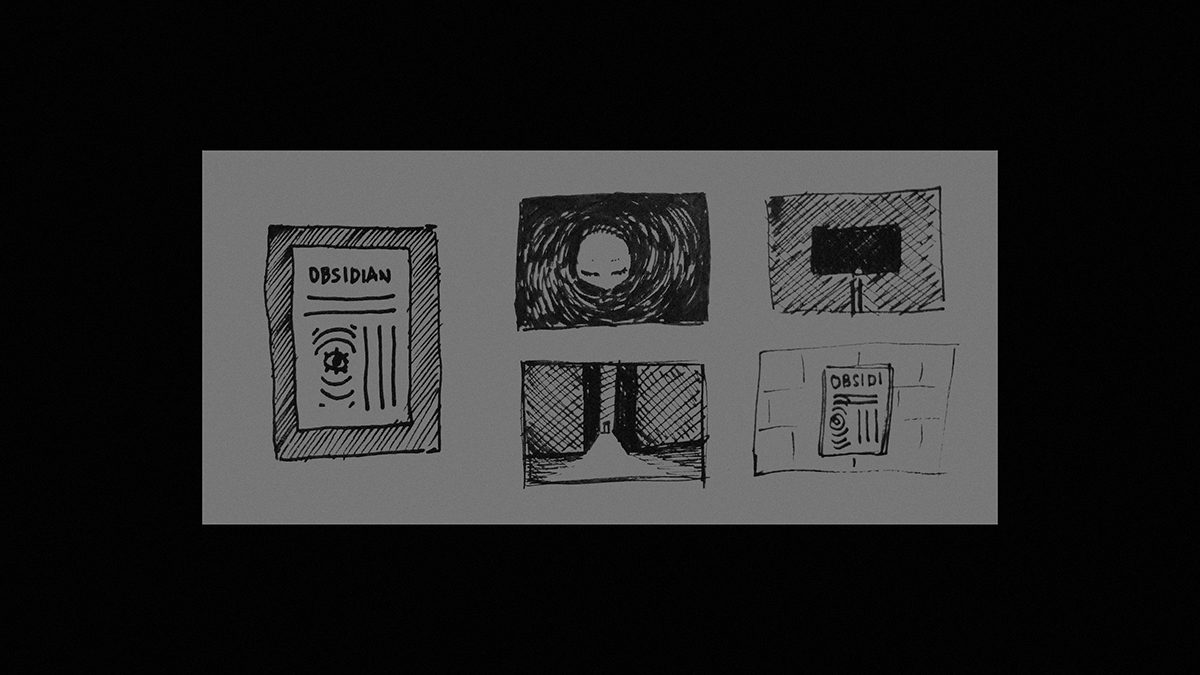

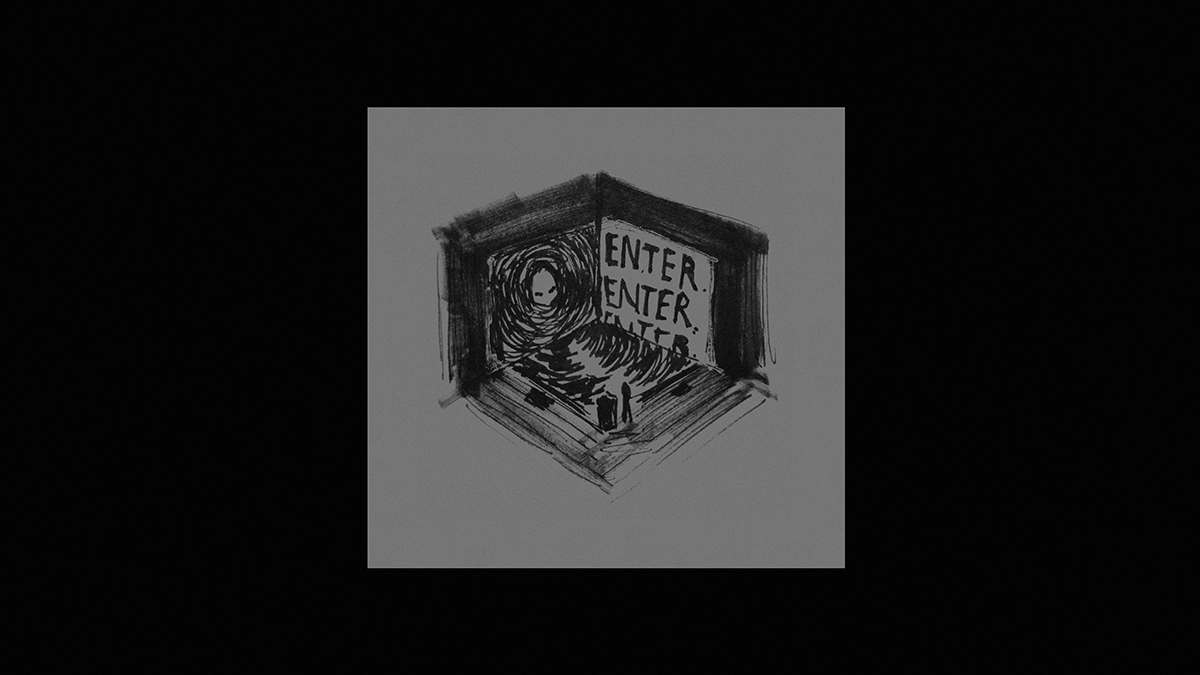

Obsidian was presented as a large-scale, narrative-based projection mapping experience that would envelop a room. We pictured it as an interactive movie with the crowd as participants.

Premise

→ Large scale projection mapping experience

→ Narrative-based

→ Movie with crowd as participants

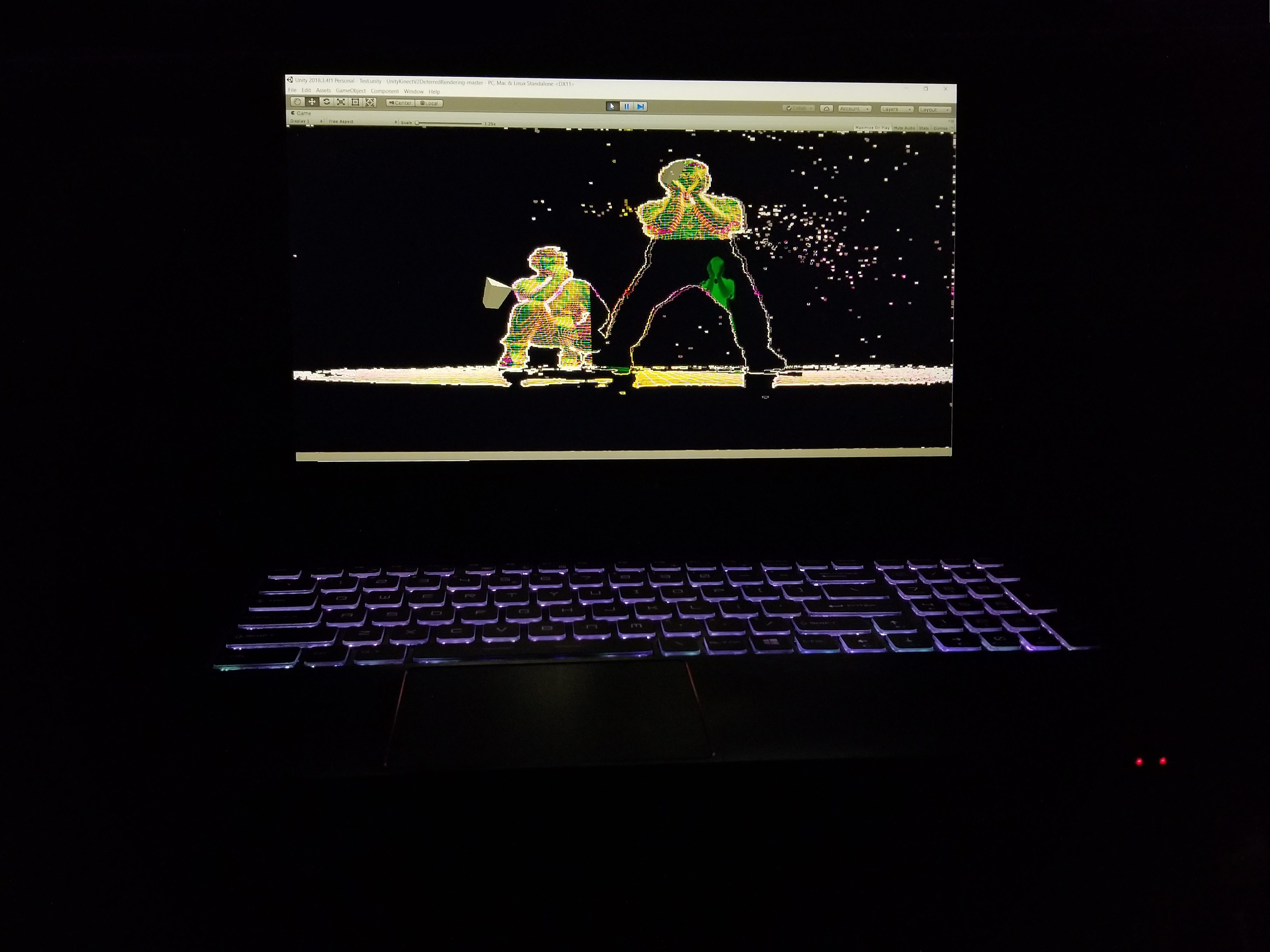

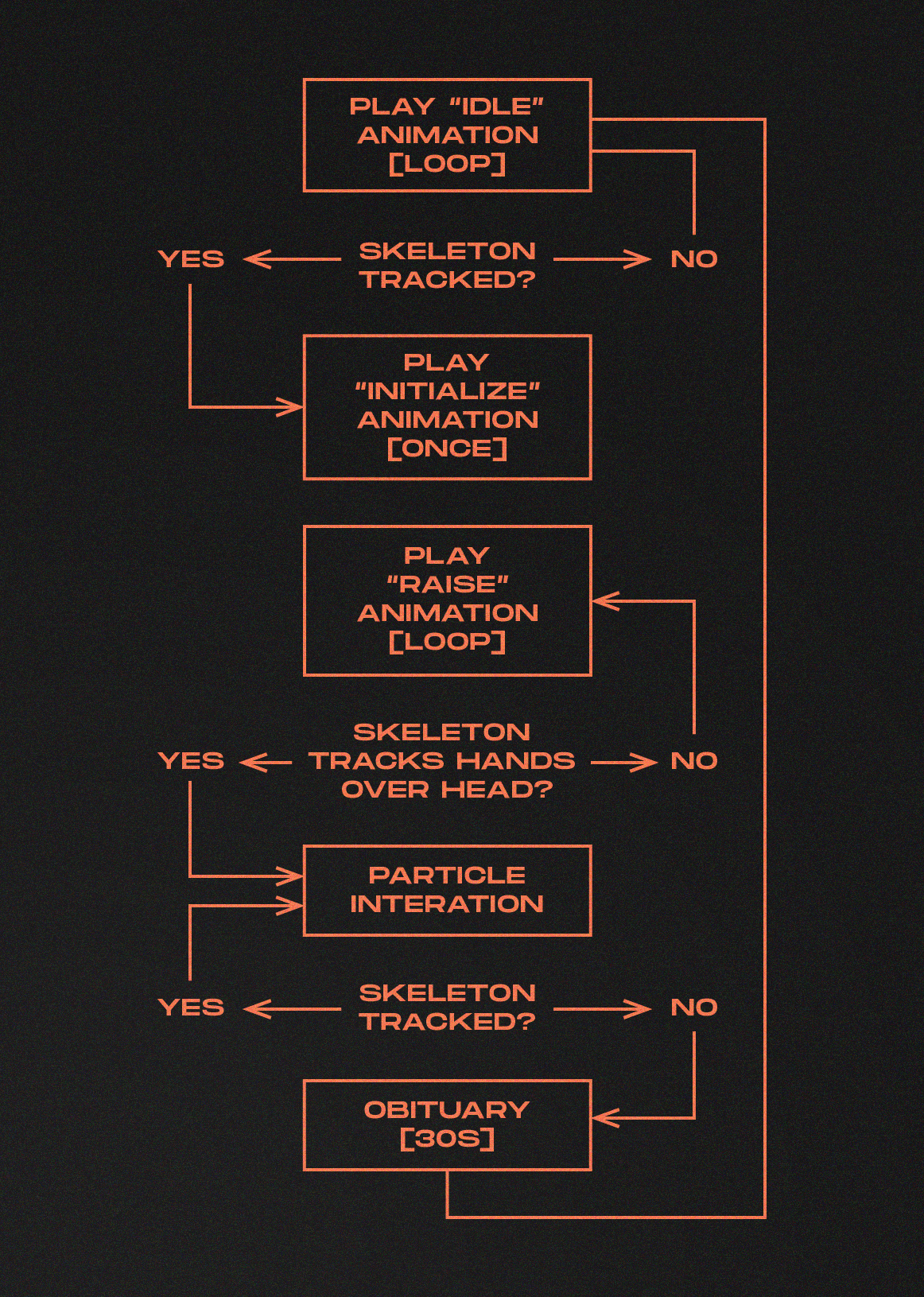

→ Crowd sensing through Kinect or Leap Motion

→ Experience changes with the crowd's motion and the audio input

Stretch Goals

→ Real-time audio

→ Autonomous VJ’ing through AI

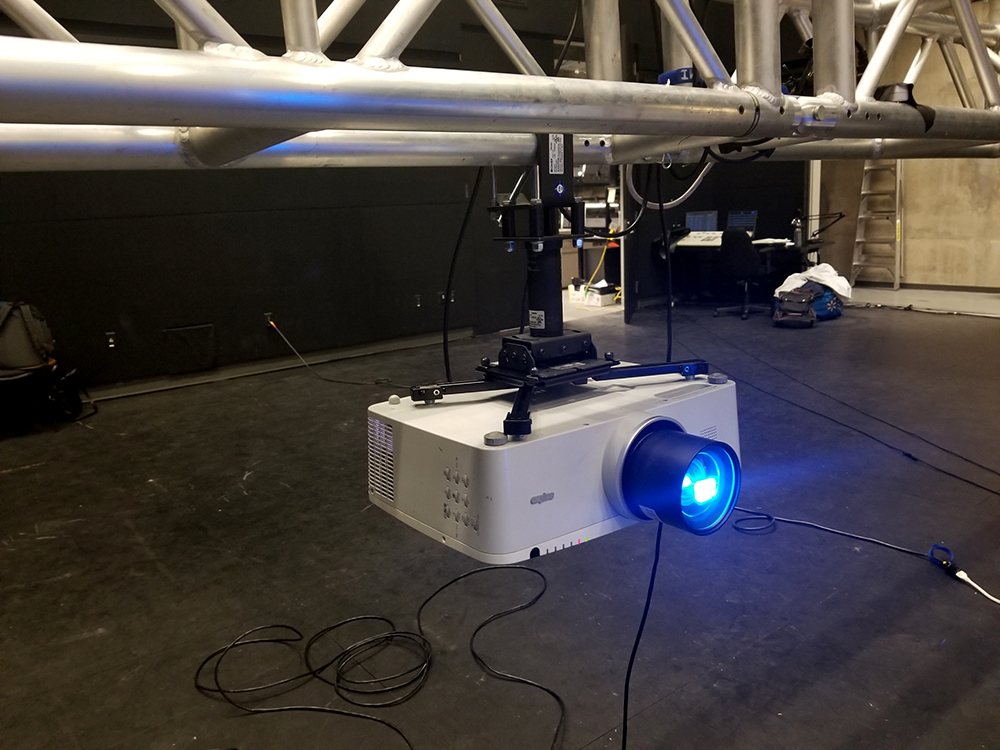

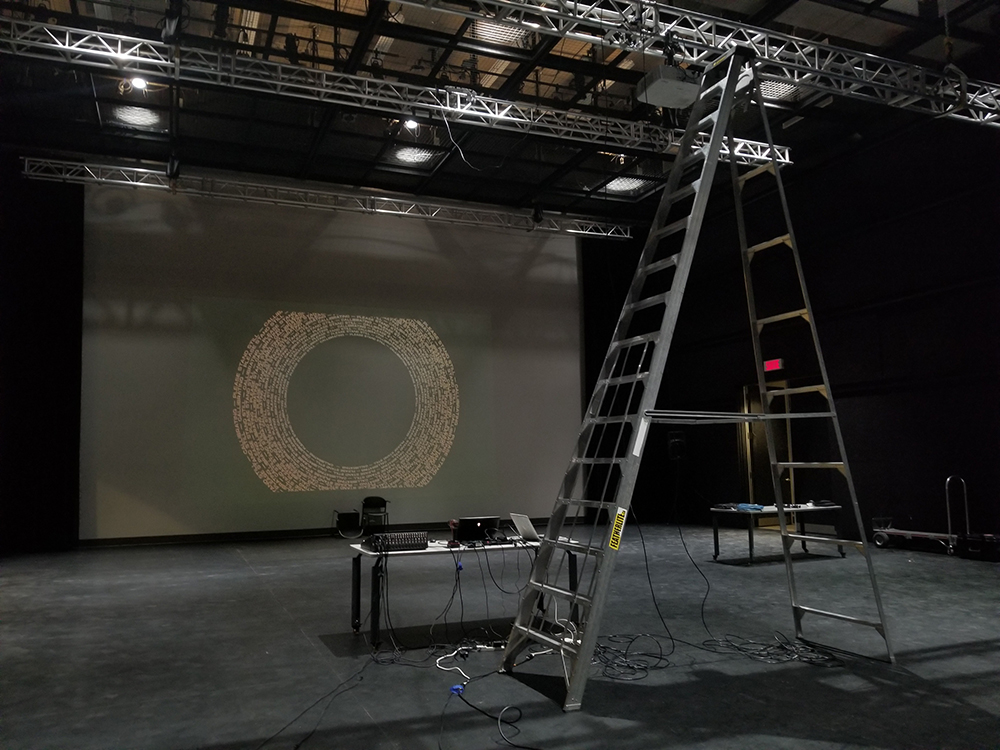

Tech

→ Projectors

→ Good sound system

→ Kinect or Leap Motion

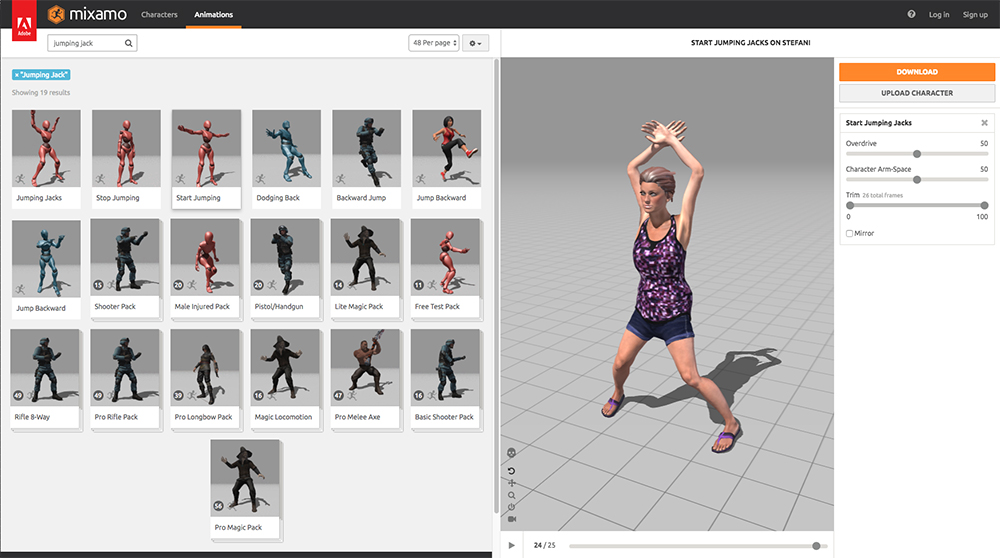

→ Blender

→ Unity + shader code

→ Max/MSP (to be determined)

Resources/references

→ instagram.com/back_down_to_mars

→ radiumsoftware.tumblr.com/post/175304870744

→ radiumsoftware.tumblr.com/post/171008805859

→ docs.depthkit.tv/docs

→ www.youtube.com/watch?v=S8j0gwzY4ns
